Difference between revisions of "Template:AI"

Ann Vincent (talk | contribs) m (→Automation Mode) |

Ann Vincent (talk | contribs) m |

||

| Line 14: | Line 14: | ||

==Interactive Mode== | ==Interactive Mode== | ||

| − | The AI interactive mode (also known as Work with +AI) can be enabled on organization profiles, user profiles, and UTA records. To learn more about this feature, read our page on [[ | + | The AI interactive mode (also known as Work with +AI) can be enabled on organization profiles, user profiles, and UTA records. To learn more about this feature, read our page on [[Work with +AI]]. To learn about a similar feature for internal users, read our page on our [[+AI Insights]] feature. |

==Using +AI with the Variable Processor== | ==Using +AI with the Variable Processor== | ||

| Line 48: | Line 48: | ||

||[[File:AI-Syntax-Prompt-Example-3.png|none|800px]] | ||[[File:AI-Syntax-Prompt-Example-3.png|none|800px]] | ||

|} | |} | ||


Revision as of 12:06, 15 January 2024

The SmartSimple Cloud +AI integration lets you use large language models (LLMs) from within our business process automation platform. LLMs can be used to improve productivity, processes, and outcomes. This article walks through how to set up the integration, outlines the two modes (automation and interactive), and describes some sample scenarios.


Overview

There are two modes of operation when using SmartSimple Cloud +AI:

- Interactive Mode: This mode of operation occurs on a single object such as a grant application or review. In this scenario, the user can interact with the AI using a call-and-response model. The user might ask the AI to help them rewrite content, translate content into another language, or make content more concise. The user can ask questions (prompts) and follow up with more related questions. The user can also be presented with optional predefined templates to streamline common tasks.

- Automation Mode: This mode of operation can occur in various areas of the platform. In this scenario, the system is configured to interact automatically with your chosen third-party LLM vendor to perform a task, with or without manual intervention. For example, the system can be configured to automatically generate an executive summary of an application or a summary of the reviewers’ comments. Applications could also be prescreened and recommended, or the AI could suggest reviewers with subject matter expertise related to the application.
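
The difference between the two modes can be sketched in a few lines of Python. This is only an illustration of the call-and-response idea versus a preconfigured prompt, not SmartSimple's implementation: `echo_model`, `InteractiveSession`, and `summarize_automatically` are hypothetical names, and `echo_model` is a stand-in for a real LLM vendor call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def echo_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for an LLM vendor call; it only reports what it saw."""
    last = messages[-1]["content"]
    return f"[reply to: {last}] (context: {len(messages)} messages)"


class InteractiveSession:
    """Interactive mode: a user sends prompts and follow-ups about a single record."""

    def __init__(self, model: Callable[[List[Message]], str]) -> None:
        self.model = model
        self.history: List[Message] = []

    def ask(self, prompt: str) -> str:
        # Each follow-up is sent together with the earlier exchange,
        # so the model can relate it to the previous questions.
        self.history.append({"role": "user", "content": prompt})
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def summarize_automatically(
    application_text: str, model: Callable[[List[Message]], str]
) -> str:
    """Automation mode: a preconfigured prompt runs without a user typing it."""
    prompt = "Write an executive summary of this application: " + application_text
    return model([{"role": "user", "content": prompt}])
```

In the interactive sketch the history grows with every exchange, while the automation sketch sends one fixed prompt built from record content.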

Configuration - Essentials

Setting up +AI Integration

To get started setting up +AI integration, be sure to read our page on enabling +AI within the system.

Interactive Mode

The AI interactive mode (also known as Work with +AI) can be enabled on organization profiles, user profiles, and UTA records. To learn more about this feature, read our page on Work with +AI. To learn about a similar feature for internal users, read our page on our +AI Insights feature.

Using +AI with the Variable Processor

Configuring Automation Mode

Once +AI integration has been set up, the automation mode can be used in various areas of the system. The existing SmartSimple variable processor has been modified to allow you to interface with the third-party AI vendor. To familiarize yourself with the syntax and possible statements, you can test the syntax using the Variable Syntax Helper by opening an object record and going to Tools > Configuration Mode > Variable Syntax Helper.

Syntax

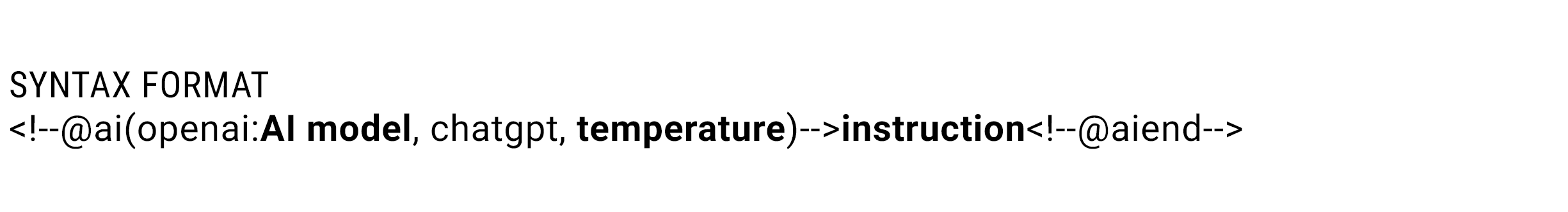

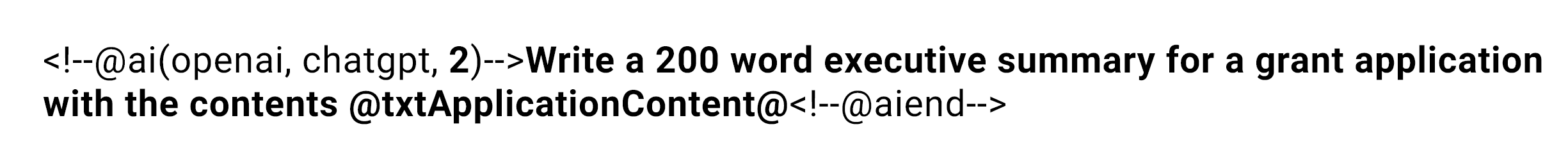

The syntax used for prompts in web page views and workflows is:

These parameters are explained as follows:

- AI model: (Optional) The name of the AI model being used by the service. If the service is OpenAI and the model is left unspecified, ChatGPT-3.5 will be used by default.

- temperature: Sets the desired level of randomness of the generated text, where a value of "0" is the most conservative while a value of "9" is the most random.

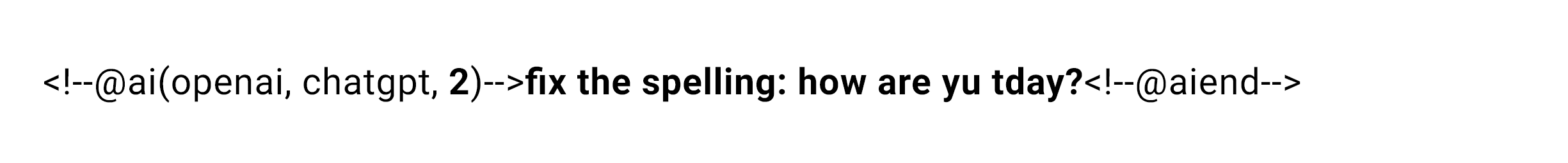

- instruction: The natural language prompt for the AI. For example, "fix the spelling: how are yu tday?" or "Translate the following into Japanese: hello, world!".
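
As a rough illustration of how the three parameters relate, the Python sketch below collects them into one settings object and applies the rules described above: the model is optional, the temperature lies between 0 and 9, and the instruction is a non-empty prompt. It does not reproduce the variable processor syntax. `PromptSettings` and `make_prompt_settings` are hypothetical names, the default is stored as the label used in this article rather than a vendor model identifier, and accepting fractional temperatures is an assumption.

```python
from dataclasses import dataclass
from typing import Optional

# Label taken from the text above; the actual identifier sent to the vendor is not shown here.
DEFAULT_MODEL_LABEL = "ChatGPT-3.5"


@dataclass(frozen=True)
class PromptSettings:
    instruction: str
    temperature: float
    model: str


def make_prompt_settings(
    instruction: str, temperature: float, model: Optional[str] = None
) -> PromptSettings:
    """Validate the parameters and fall back to the default model when none is given."""
    if not instruction or not instruction.strip():
        raise ValueError("instruction must not be empty")
    # 0 is the most conservative setting and 9 the most random.
    if not 0 <= temperature <= 9:
        raise ValueError("temperature must be between 0 and 9")
    return PromptSettings(instruction.strip(), temperature, model or DEFAULT_MODEL_LABEL)
```

For example, `make_prompt_settings("fix the spelling: how are yu tday?", 0)` would use the default model with the most conservative temperature, while passing `model="gpt-3.5-turbo-instruct"` selects that model explicitly.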

| Example Scenario | Syntax |

|---|---|

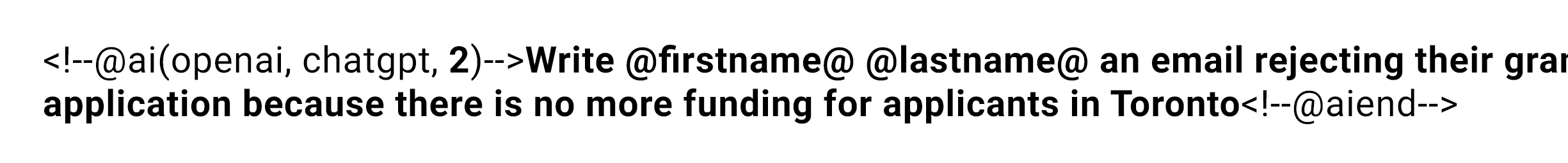

| Ask AI to write an email for a specific user | |

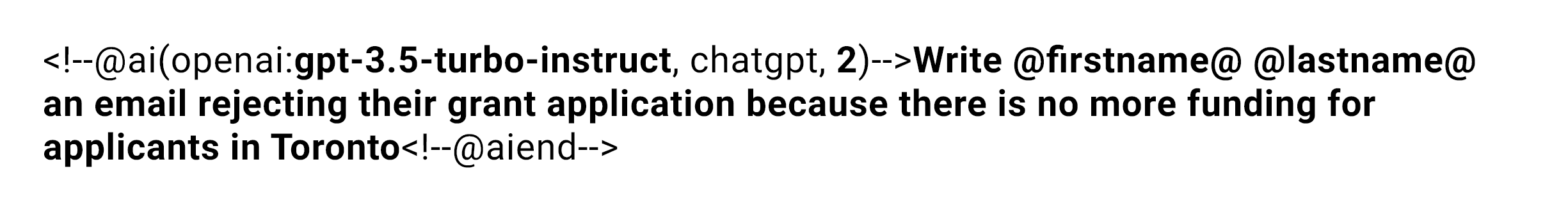

| Ask AI to write an email for a specific user using model gpt-3.5-turbo-instruct | |

| Ask AI to fix the spelling of a message | |

| Ask the AI to write a grant application summary |